Google has just released performance data for its Tensor Processing Unit (TPU), a custom chip for machine learning.

TPUs are

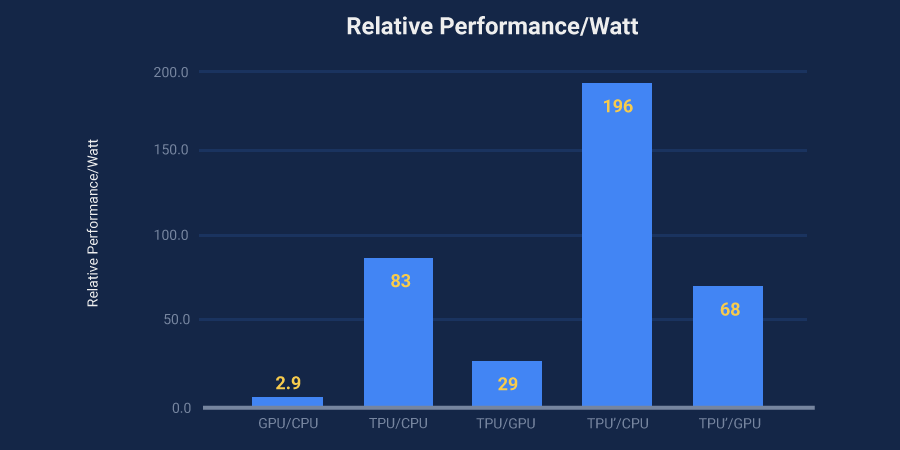

- 15-30x faster than contemporary GPUs and CPUs when running deep learning inference workloads

- 30-80x more energy efficient, measured in operations per watt (TOPS/Watt)

- frugal in code: the neural networks Google runs on them are defined in only 100-1500 lines each

Google claims that without these chips it would have had to double the number of data centers it runs.