I recently conducted an inter-rater reliability study for a client. There was some confusion about what inter-rater reliability actually measures.

Inter-rater reliability measures agreement. It’s a measure of precision, not accuracy. As anyone who’s been on social media knows, it’s possible for everyone to be in complete agreement, yet utterly wrong.
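
To make "agreeing but wrong" concrete, here is a minimal sketch using Cohen's kappa, one common agreement statistic for two raters. The post doesn't say which statistic the client study used, so treat kappa as an illustrative choice. The ratings and "true" labels are hypothetical, and `cohens_kappa` and `accuracy` are helper names made up for this example.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: expected overlap given each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return float("nan")  # undefined: both raters used one identical label
    return (observed - expected) / (1 - expected)


def accuracy(ratings, truth):
    """Share of ratings that match the (hypothetical) correct answer."""
    return sum(r == t for r, t in zip(ratings, truth)) / len(truth)


# Hypothetical data: the correct grade for six items, and two raters
# who share the same bias and therefore give identical (wrong) grades.
truth = ["good", "good", "poor", "poor", "good", "poor"]
rater_a = ["poor", "poor", "good", "good", "poor", "good"]
rater_b = list(rater_a)

print("kappa between raters:", cohens_kappa(rater_a, rater_b))
print("accuracy of rater A: ", accuracy(rater_a, truth))
```

Here the two raters agree perfectly (kappa of 1.0), yet every single rating is wrong (accuracy of 0.0). A reliability study would report excellent agreement and say nothing about the error.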

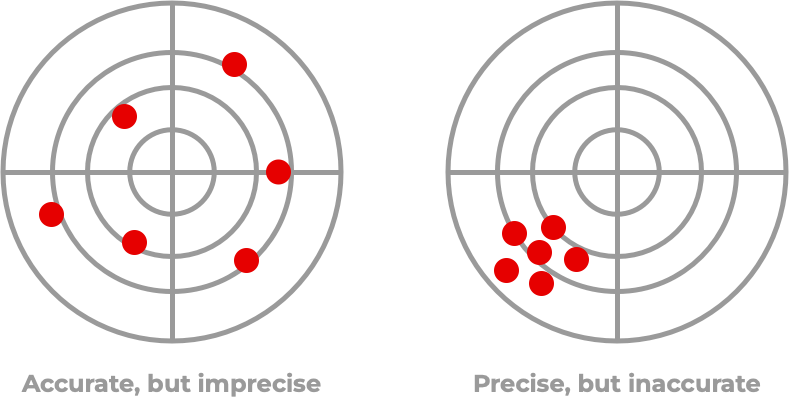

The diagram above summarises the difference between precision and accuracy.

Precision indicates whether the assessments are similar. If multiple people assess the same service and give the same ratings, your survey is precise. There isn't a lot of variability in precise ratings.

However, precision doesn't mean the ratings are accurate. Systematic bias leads to high precision but low accuracy: racism, sexism, domain specialism, etc. can all produce scores that are biased but precise. Accuracy measures whether assessments are correct on average. It's harder to gauge in complex domains, as it's not always clear what is being assessed. However, it's more important than precision.
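
One way to see the two ideas separately is to score a panel of numeric ratings twice: spread (standard deviation) as a rough measure of precision, and the gap between the mean rating and the correct score (bias) as a rough measure of accuracy. This is a minimal sketch, assuming a known "true" score exists, which, as noted above, is often the hard part. The scale, the panels, and the `precision_and_bias` helper are all hypothetical.

```python
import statistics


def precision_and_bias(ratings, true_score):
    """Return (spread, bias) for a panel of ratings of the same thing."""
    spread = statistics.pstdev(ratings)  # low spread = precise
    bias = statistics.mean(ratings) - true_score  # near zero = accurate on average
    return spread, bias


true_score = 8  # hypothetical correct rating on a 1-10 scale

biased_panel = [4, 4, 5, 4, 4]  # raters agree closely, but all score too low
noisy_panel = [6, 10, 7, 9, 8]  # raters disagree, but centre on the true score

for name, panel in [("biased", biased_panel), ("noisy", noisy_panel)]:
    spread, bias = precision_and_bias(panel, true_score)
    print(f"{name}: spread={spread:.2f}, bias={bias:+.2f}")
```

The biased panel is the more precise of the two (spread of about 0.4) but misses the true score by nearly four points, while the noisy panel is less precise (spread of about 1.4) but correct on average.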

We’d rather be generally correct than specifically wrong. Of course, we want ratings that are both precise and accurate. Inter-rater reliability only addresses the less important half of the problem.